Big Data in <500 Clay Tablets?

The BPS team is exploring the notion that “Big Data” may characterize many humanities research fields. The reason is not the size of the datasets with which researchers work but the way that computational and data-driven methods can transform scholarly workflows. In particular, we believe that prosopography may properly be considered a “Big Data” research field.

- From the perspective of the Humanist:

Observations by Laurie Pearce, project director of BPS and Hellenistic Babylonia: Texts, Images and Names (HBTIN)

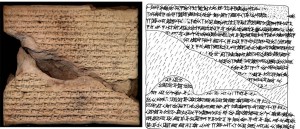

The total number of cuneiform texts cataloged in museums, libraries, and private collections throughout the world is well under 500,000 (cdli.ucla.edu). The number is at once stunningly large and stunningly small. These clay and stone documents, composed in one of the world’s oldest writing systems, provide the first documentation of three and one-half millennia of human activity: economic, religious, intellectual, and scientific. It is remarkable that so many have survived. At the same time, it is frustrating that they are so unevenly distributed over such a long time span. As a result, the study of various times and places often depends on fragmentary data, as the illustration above shows.

Nonetheless, scholars of the ancient Near East study the lands and empires that flourished in what are now Iraq and parts of Syria, Turkey, and Iran. They ask of the available sources questions recognizable from many other humanities disciplines. They are also increasingly turning to methodologies and tools from the digital and social-science worlds to frame their research agendas and answer their questions.

The remarkable embrace of digital tools by practitioners of this recondite specialty deserves more attention than might be expected. The text corpora with which researchers are concerned range from several hundred to roughly 10,000 texts, and such data has a correspondingly small footprint in a standard off-the-shelf database. Nonetheless, the data, and the questions researchers ask of it, suggest that even “Small Data” faces challenges in modeling authority and data review, both areas of concern in “Big Data”.

BPS is unique as a toolkit for prosopography: it emulates the workflow of real scholars grappling with data analysis, including, notably, their uncertainty. The small cuneiform corpus of some 500 texts from the city of Uruk in the 4th–3rd centuries BCE would seem to belong irrevocably to the realm of “Small Data”. Yet that same size served BPS well as a development corpus. It allowed the project to capture in digital tools the human researcher’s analytic process of disambiguating the many attestations of namesakes into discrete individuals. It also allowed BPS to model authority, scholarly debate, and the *communis opinio*.

The central problem in prosopography, and thus, in the social network analysis that draws upon it, is the disambiguation of the many individuals who share the same names. Culturally specific naming practices may simplify or complicate the process: kings of England named Henry are numbered VI, VII, VIII, just as men in non-royal families might be called John Sr., John Jr., John III, etc. Disambiguation is made more difficult when, for example, individuals in alternating generations are named for their grandfathers (a practice called papponymy), with the result that a document may record the participation of Anu-uballiṭ, son of Nidintu-Anu, son of Anu-uballiṭ, son of Nidintu-Anu.
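The papponymy problem can be made concrete with a minimal Python sketch. It assumes a hypothetical plain-text filiation format in which generations are joined by ", son of ". The helper names (`parse_filiation`, `papponymic_generations`, `colliding_name_pairs`) are illustrative only and are not part of BPS. The sketch shows that the pair "Anu-uballiṭ, son of Nidintu-Anu" fits two different generations of the same chain. A document that gives only a man's name and his father's name therefore cannot, on its own, tell the grandson from the grandfather.

```python
from collections import Counter


def parse_filiation(text: str) -> list[str]:
    """Hypothetical parser: 'X, son of Y, son of Z' -> ['X', 'Y', 'Z'].

    Index 0 is the individual named first; each later index is one
    generation further back.
    """
    return [part.strip() for part in text.split(", son of ")]


def papponymic_generations(chain: list[str]) -> list[int]:
    """Indices i where the man at chain[i] bears his grandfather's name."""
    return [i for i in range(len(chain) - 2) if chain[i] == chain[i + 2]]


def colliding_name_pairs(chain: list[str]) -> list[tuple[str, str]]:
    """(name, father) pairs that occur in more than one generation.

    A record giving only 'name, son of father' for such a pair is
    ambiguous between those generations.
    """
    pairs = Counter(zip(chain, chain[1:]))
    return [pair for pair, count in pairs.items() if count > 1]


record = "Anu-uballiṭ, son of Nidintu-Anu, son of Anu-uballiṭ, son of Nidintu-Anu"
chain = parse_filiation(record)
print(chain)
print(papponymic_generations(chain))  # [0, 1]
print(colliding_name_pairs(chain))    # [('Anu-uballiṭ', 'Nidintu-Anu')]
```

Real cuneiform filiations can include further elements, such as family (ancestor) names, which this simplified parser ignores.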

The specialist in any corpus knows that clues inherent in the data, and in the contexts in which they appear, facilitate disambiguation. Asked how he distinguishes between close namesakes, the specialist might claim “intuition”. His expertise is so ingrained that it obscures the sequential process behind it. That process involves considering attributes and weighing the likelihood that any one of them, or a combination of them, applies to one namesake but not the other. For example, dates showing that two transactions occurred seventy-five years apart would, in some social contexts, make it highly unlikely that Anu-uballiṭ(1) is the same individual as Anu-uballiṭ(2). Other attributes do not provide such clear criteria. Different scholars might assess the usefulness of those measures (and/or metadata) for disambiguation differently, in effect assigning them different weights. For example, how likely is a buyer of real estate also to participate in slave sales? Differences in outcome are chalked up to scholarly debate, which may range from friendly exchange to flame war. Scholars committed to the exploration of ideas may annotate these differences and note how their procedures and assumptions led to variant outcomes. Sources of such variation include:

(a) the inclusion or exclusion of data from different researchers’ data sets;
(b) identifications resting on few attestations that appear in limited contexts across the corpus;
(c) discrepancies reflecting missing or corrupted metadata.

These are highlighted in Figure 2.
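The weighing of attributes described above can be sketched as a simple weighted score. This is a hypothetical illustration, not the BPS rule engine. The `Attestation` fields, the 60-year `max_span` cut-off, the integer weights, the 0.7 threshold, and the year numbers are all assumptions chosen for the example. Two scholars apply different weights to the same pair of attestations and reach different conclusions. The date constraint rules out a pair seventy-five years apart outright.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Attestation:
    """One occurrence of a personal name in one document (hypothetical schema)."""
    name: str
    father: Optional[str]
    year: int        # hypothetical year of the document
    role: str        # e.g. "buyer", "seller", "witness"
    text_type: str   # e.g. "real-estate sale", "slave sale"


def same_person_score(a: Attestation, b: Attestation,
                      weights: Dict[str, int], max_span: int = 60) -> float:
    """Score in [0, 1] that a and b refer to the same individual.

    max_span is a hypothetical hard constraint: attestations more than
    max_span years apart are treated as different people. The remaining
    attributes are soft evidence; each adds its weight when it matches.
    """
    if a.name != b.name or abs(a.year - b.year) > max_span:
        return 0.0
    matches = {
        "father": a.father is not None and a.father == b.father,
        "role": a.role == b.role,
        "text_type": a.text_type == b.text_type,
    }
    earned = sum(w for attr, w in weights.items() if matches[attr])
    return earned / sum(weights.values())


a = Attestation("Anu-uballiṭ", "Nidintu-Anu", 100, "buyer", "real-estate sale")
b = Attestation("Anu-uballiṭ", "Nidintu-Anu", 112, "buyer", "slave sale")
c = Attestation("Anu-uballiṭ", "Nidintu-Anu", 175, "buyer", "real-estate sale")

# Two scholars who weigh the same evidence differently.
scholar_1 = {"father": 3, "role": 1, "text_type": 1}
scholar_2 = {"father": 2, "role": 1, "text_type": 2}
THRESHOLD = 0.7  # hypothetical cut-off for asserting identity

for label, w in [("scholar_1", scholar_1), ("scholar_2", scholar_2)]:
    s = same_person_score(a, b, w)
    print(label, s, "same person" if s >= THRESHOLD else "distinct")
print(same_person_score(a, c, scholar_1))  # 0.0: 75 years apart
```

Treating the date gap as a hard constraint and everything else as additive evidence is only one design choice. A fuller model might turn the date gap into a graded penalty, matching the text's "highly unlikely" rather than "impossible".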

When these differences are small, few in number, and confined to a small number of texts, dissenters or the merely curious can easily retrace the analytic process that brought assertions and facts together into a result. The challenge is much greater for a researcher who studies a corpus of 10,000 economic records from temples. Such a researcher might track the price of a liter of barley on specified festival days in several months, over a thirty-year period, in three cult centers. He might do so in the hope of determining the economic prowess of similarly named members of families of cult officiants. The research quickly becomes a complex interplay of layers of data (prices, quantities, days, years, and locations). These layers are associated with relevant individuals who have been disentangled from multiple namesakes with varying degrees of likelihood. The researcher’s colleagues may simply choose to accept all of his assertions on the basis of his reputation and standing in the field. Alternatively, they might dismiss the same results if produced by a relative unknown, such as a recent PhD (even a student of a legendary professor) or an interested dilettante.

Common notions of scientific exploration include “reproducible results”. This carries the corollary that the data and research method can be tracked and applied repeatedly. Prosopography is a field where degrees of uncertainty are built into the methodology. To approach that standard in such fields, researchers must track changes and the people who introduced them as a necessary component of responsible practice. Features of the BPS architecture designed to support probabilistic assertions include workspaces for individual experimentation, recording of the parameters applied to any particular research question, and authority tracking. In this way, BPS engages with one of the questions “Big Data” faces, even though the number of bytes any BPS researcher processes may be small.
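Workspaces with recorded parameters and tracked changes can be illustrated with a small Python sketch. Everything here is hypothetical: the `Workspace` class, its `fork`, `set_parameter`, and `fingerprint` methods, and the parameter names. It is not how BPS itself stores this information. The sketch keeps an append-only log of who changed which parameter, when, and why. It also hashes the parameters, so that two runs can be checked for identical settings.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class Workspace:
    """Hypothetical private workspace: one researcher's parameters plus an
    append-only log of who changed what, when, and why."""
    owner: str
    parameters: dict
    log: list = field(default_factory=list)

    def fingerprint(self) -> str:
        """SHA-256 of the parameters as canonical JSON (keys sorted), so
        identical settings always give the identical fingerprint."""
        canonical = json.dumps(self.parameters, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def set_parameter(self, key: str, value, author: str, reason: str = "") -> None:
        """Change one parameter, logging the old and new values first."""
        self.log.append({"time": _now(), "action": "set", "author": author,
                         "key": key, "old": self.parameters.get(key),
                         "new": value, "reason": reason})
        self.parameters[key] = value

    def fork(self, new_owner: str) -> "Workspace":
        """Start a new workspace from a deep copy of these parameters,
        recording its origin so the lineage of a result can be traced."""
        child = Workspace(new_owner, copy.deepcopy(self.parameters))
        child.log.append({"time": _now(), "action": "fork", "author": new_owner,
                          "from": self.owner, "fingerprint": self.fingerprint()})
        return child


# Usage: a colleague forks a workspace and relaxes a hypothetical date-gap rule.
base = Workspace("researcher_a", {"max_active_span": 60,
                                  "weights": {"father": 3, "role": 1, "text_type": 1}})
variant = base.fork("researcher_b")
variant.set_parameter("max_active_span", 75, author="researcher_b",
                      reason="testing a looser date constraint")
for entry in variant.log:
    print(entry["action"], entry["author"], entry.get("key"), entry.get("new"))
print(base.fingerprint() == variant.fingerprint())  # False: settings now differ
```

The fingerprint hashes a JSON dump with sorted keys, so the order in which parameters were entered does not affect it. The fork records the parent's fingerprint, which lets a reader confirm exactly which settings a variant result started from.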